#Requestanimationframe react native


How to Improve the Performance of Your React Native App

Well-known global companies such as Facebook, Instagram, and Walmart, along with thousands of startups, have built their apps with React Native. React Native mobile applications run efficiently and make optimal use of hardware resources.

React Native also lets you share and reuse code between Android and iOS apps, and it has a large developer community that supports it. If your application is built with React Native, let's look at the most effective ways to improve its performance.

1. Identify and Prevent Memory Leaks

Memory leaks have long been a significant issue on Android devices. Unwanted processes often keep running in the background, and they can cause memory leaks in your application. With a memory leak, the application is closed but its memory is not released, so the next launch allocates additional memory.

To prevent memory leaks, we recommend that you avoid using ListView. Use list components such as FlatList, SectionList, or VirtualizedList instead. These components help avoid memory leaks, provide smooth scrolling, and increase the overall performance and quality of your mobile application.

2. Reduce Application Size

Most React Native applications built with JavaScript use native components as well as some third-party libraries. This increases the size of the application: the more third-party components and libraries you use, the larger the application becomes. Reducing the size of the application shortens download time and, thanks to the reduced code, improves application performance.

To reduce the size of the application you're building, use only essential components and optimize every component you use. Beyond components, it also helps to reduce the size of graphics and graphic elements.

On Android, you can use ProGuard to optimize your code as much as possible. Mid-range devices usually respond better to smaller applications, and flagship devices also generally perform much better with them. Additionally, we do not recommend using the main thread to pass components that rely on a large message queue.

3. Render with Caution

Rendering redundant components can hurt performance once your React Native application is large enough. To prevent such scenarios, avoid mixing different states of the lifecycle and props.

It is important to ensure that reconciliation is not overloaded with unnecessary work. Otherwise, the frames-per-second output of the JavaScript thread can drop, and the user may feel that the application is slow. To prevent unnecessary rendering, use the shouldComponentUpdate function so that components re-render only when required.
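As a minimal sketch of that check, the comparison inside a shouldComponentUpdate method could look like the following. The helper names shallowEqual and shouldUpdate and the sample props are assumptions for illustration, not part of React Native's API; React's own PureComponent and React.memo perform a similar shallow comparison for you.

```javascript
// Hypothetical helper: true when both objects have the same keys
// and every value is identical (compared with Object.is).
function shallowEqual(a, b) {
  if (Object.is(a, b)) return true;
  if (typeof a !== 'object' || a === null || typeof b !== 'object' || b === null) {
    return false;
  }
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(
    (key) => Object.prototype.hasOwnProperty.call(b, key) && Object.is(a[key], b[key])
  );
}

// Sketch of the decision a class component's shouldComponentUpdate could make:
// re-render only when props or state actually changed.
function shouldUpdate(prevProps, nextProps, prevState, nextState) {
  return !shallowEqual(prevProps, nextProps) || !shallowEqual(prevState, nextState);
}

// Same values: no re-render needed.
console.log(shouldUpdate({ title: 'Home' }, { title: 'Home' }, { open: false }, { open: false })); // false
// A changed prop: re-render.
console.log(shouldUpdate({ title: 'Home' }, { title: 'Settings' }, { open: false }, { open: false })); // true
```

Because the comparison is shallow, a nested object that is recreated on every render still counts as a change, so it helps to keep props flat and stable.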

4. Optimize Images

Images use a significant share of memory. To further improve the performance of your React Native application, you need to optimize the size of its images. You can use services such as TinyPNG to optimize pictures before you use them in your mobile app.

If you use JPG or PNG images, we recommend switching to the WebP format. We encourage the use of WebP because it can increase application loading speed by up to 28% and reduce the Android and iOS binary size by 25%. It also reduces the CodePush bundle by 66%.

5. Simplify JSON Data

Many native mobile applications pull data from a remote server using a private or public API. This data is in JSON format and may contain complex nested objects.

App developers tend to store all JSON data locally so that they can access and retrieve it at any time. Because JavaScript renders the data in stages, this causes performance bottlenecks. Therefore, we recommend converting the raw JSON into simple objects before displaying it.
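A minimal sketch of that conversion is shown below. The response shape, the field names, and the toSimpleUsers helper are all assumptions made up for illustration; a real API will have its own structure.

```javascript
// Hypothetical raw API response with nested objects (shape assumed for illustration).
const rawResponse = {
  data: {
    users: [
      { id: 1, profile: { name: { first: 'Ada', last: 'Lovelace' }, contact: { email: 'ada@example.com' } } },
      { id: 2, profile: { name: { first: 'Alan', last: 'Turing' }, contact: { email: 'alan@example.com' } } },
    ],
  },
};

// Convert each nested record into a flat object that holds only what the UI displays.
function toSimpleUsers(response) {
  const users = response?.data?.users ?? [];
  return users.map((user) => ({
    id: user.id,
    fullName: `${user.profile.name.first} ${user.profile.name.last}`,
    email: user.profile.contact.email,
  }));
}

const simpleUsers = toSimpleUsers(rawResponse);
console.log(simpleUsers[0].fullName); // Ada Lovelace
```

Doing the conversion once, right after the data arrives, means list items receive small flat objects instead of walking the nested structure on every render.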

6. Optimize App Launch Time

If your application takes too long to launch, users will not keep using it. One of the elements that affects launch time is Object.finalize. It is regarded as one of the fundamental reasons for higher application launch times.

Finalizers run on the main thread and can cause error messages. These messages indicate that there is not enough memory available even when there is enough RAM. This is one of the main reasons applications launch more slowly. To prevent such problems, avoid using Object.finalize, and do not run heavy components on the main thread.

A React Native mobile app is the way to go!

If you want to offer your users an application with more reliable performance and greater stability, then you should go with React Native app development. React Native is a popular JavaScript library for developing cross-platform applications.

However, if not implemented carefully, it can cause certain problems. Once you are familiar with the technical details, you will have a better understanding of how to resolve them.

Looking for a Reliable Company for Mobile App Development Services?

Working with a company experienced in React Native application development is the only way to ensure that there are no obstacles in your mobile application development projects. We are one of the top mobile app development companies in India. Apart from React Native Android application development services, we also offer iPhone application development and custom web development services in India and the United States.

Why Choose Fusion Informatics?

At Fusion Informatics, we believe in delivering value and growth for our clients. Fusion Informatics provides mobile application, Android, and React Native app development services. Our team delivers world-class products through technical competence and creativity. Connect with us to make your mobile application a great success!


Underscore.js, React without virtual DOM, and why you should use Svelte

#504 — September 4, 2020


JavaScript Weekly

Underscore.js 1.11.0: The Long Standing Functional Helper Library Goes Modular — “Underscore!?” I hear some of our longer serving readers exclaiming. Yes, it’s still around, still under active development, and still a neat project at a mere 11 years old. As of v1.11.0 every function is now in a separate module which brings treeshaking opportunities to all, but there’s also a monolithic bundle in ES module format for those who prefer that. This article goes into a lot more depth about the new modular aspects.

Jeremy Ashkenas

Mastering the Hard Parts of JavaScript — A currently 17-part blog post series written by someone taking Frontend Masters’ JavaScript: The Hard Parts course and reflecting on the exercises that have helped them learn about callbacks, prototypes, closures, and more.

Ryan Ameri

FusionAuth Now Offers Breached Password Detection and LDAP — FusionAuth is a complete identity and access management tool that saves your team time and resources. Implement complex standards like OAuth, OpenID Connect, and SAML and build out additional login features to meet compliance requirements.

FusionAuth sponsor

How Browsers May Throttle requestAnimationFrame — requestAnimationFrame is a browser API that allows code execution to be triggered before the next available frame on the device display, but it’s not a guarantee and it can be throttled. This post looks at when and why.

Matt Perry

Brahmos: Think React, But Without the VDOM — An intriguing user interface library that supports the modern React API and native templates but with no VDOM.

Brahmos

NativeScript 7.0: Create Native iOS and Android Apps with JS — A significant step forward for the framework by aligning with modern JS standards and bringing broad consistency across the whole stack. Supports Angular, Vue, and you can even use TypeScript if you prefer.

NativeScript

⚡️ Quick bytes:

🎧 The Real Talk JavaScript podcast interviewed Rich Harris of the Svelte project – well worth a listen if you want to get up to speed with why you should be paying attention to Svelte.

ESLint now has a public roadmap of what they're working on next.

You've got nine more days to develop a game for the current JS13kGames competition, if you're up for it.

VueConfTO (VueConf Toronto) are running a free virtual Vue.js conference this November.

The latest on webpack 5's release plans. Expect a final release in October.

💻 Jobs

Senior JavaScript Developer (Warsaw, Relocation Package) — Open source rich text editor used by millions of users around the world. Strong focus on code quality. Join us.

CKEDITOR

JavaScript Developer at X-Team (Remote) — Join the most energizing community for developers and work on projects for Riot Games, FOX, Sony, Coinbase, and more.

X-Team

Find a Job Through Vettery — Create a profile on Vettery to connect with hiring managers at startups and Fortune 500 companies. It's free for job-seekers.

Vettery

📚 Tutorials, Opinions and Stories

Designing a JavaScript Plugin System — jQuery has plugins. Gatsby, Eleventy, and Vue do, too. Plugins are a common way to extend the functionality of other tools and libraries and you can roll your own plugin approach too.

Bryan Braun

▶ Making WAVs: Understanding, Parsing, and Creating Wave Files — If you’ve not watched any of the Low Level JavaScript videos yet, you’re missing a treat. But this is a good place to start, particularly if the topic of working with a data format at a low level appeals to you.

Low Level JavaScript

Breakpoints and console.log Is the Past, Time Travel Is the Future — 15x faster JavaScript debugging than with breakpoints and console.log.

Wallaby.js sponsor

The New Logical Assignment Operators in JavaScript — Logical assignment operators combine logical operators (e.g. ||) and assignment expressions. They're currently at stage 4.

Hemanth HM

Eight Methods to Search Through JavaScript Arrays

Joel Thoms

TypeScript 4.0: What I’m Most Excited About — Fernando seems particularly enthused about the latest version of TypeScript!

Fernando Doglio

Machine Learning for JavaScript Devs in 10 Minutes — Covers the absolute basics but puts you in a position to move on elsewhere.

Allan Chua

How to Refactor a Shopify Site for JavaScript Performance

Shopify Partners sponsor

'TypeScript is Weakening the JavaScript Ecosystem' — Controversial opinion alert, but we need to balance out the TypeScript love sometime.

Tim Daubenschütz

▶ Why I’m Using Next.js in 2020 — Lee makes the bold claim that he thinks “the future of React is actually Next.js”.

Lee Robinson

Building a Component Library with React and Emotion

Ademola Adegbuyi

Tackling TypeScript: Upgrading from JavaScript — You’ll know Dr. Axel from Deep JavaScript and JavaScript for Impatient Programmers.. well now he’s tackling TypeScript and you can read the first 11 chapters online.

Dr. Axel Rauschmayer

Introducing Modular Underscore — Just in case you missed it in the top feature of this issue ;-)

Julian Gonggrijp

🔧 Code & Tools

CindyJS: A Framework to Create Interactive Math Content for the Web — For visualizing and playing with mathematical concepts with things like mass, springs, fields, trees, etc. Lots of live examples here. The optics simulation is quite neat to play with.

CindyJS Team

Print.js: An Improved Way to Print From Your Apps and Pages — Let’s say you have a PDF file that would be better to print than the current Web page.. Print.js makes it easy to add a button to a page so users can print that PDF directly. You can also print specific elements off of the current page.

Crabbly

AppSignal Is All About Automatic Instrumentation and Ease of Use — AppSignal provides you with automatic instrumentation for Apollo, PostgreSQL, Redis, and Next.js. Try us out for free.

AppSignal sponsor

Volt: A Bootstrap 5 Admin Dashboard Using Only Vanilla JS — See a live preview here. Includes 11 example pages, 100+ components, and some plugins with no dependencies.

Themesberg

Stencil 2.0: A Web Component Compiler for Building Reusable UI Components — Stencil is a toolchain for building reusable, scalable design systems. And while this is version 2.0, there are few breaking changes.

Ionic

NgRx 10 Released: Reactive State for Angular

ngrx

🆕 Quick releases:

Ember 3.21

Terser 5.3 — JS parser, mangler and compressor toolkit.

Cypress 5.1 — Fast, reliable testing for anything that runs in a browser.

jqGrid 5.5 — jQuery grid plugin.

np 6.5 — A better npm publish

underscore 1.11.0 — JS functional helpers library.

via JavaScript Weekly https://ift.tt/3i0cc0z


Everything You Need to Know About FLIP Animations in React

With a very recent Safari update, Web Animations API (WAAPI) is now supported without a flag in all modern browsers (except IE). Here’s a handy Pen where you can check which features your browser supports. The WAAPI is a nice way to do animation (that needs to be done in JavaScript) because it’s native — meaning it requires no additional libraries to work. If you’re completely new to WAAPI, here’s a very good introduction by Dan Wilson.

One of the most efficient approaches to animation is FLIP. FLIP requires a bit of JavaScript to do its thing.

Let’s take a look at the intersection of using the WAAPI, FLIP, and integrating all that into React. But we’ll start without React first, then get to that.

FLIP and WAAPI

FLIP animations are made much easier by the WAAPI!

Quick refresher on FLIP: The big idea is that you position the element where you want it to end up first. Next, apply transforms to move it to the starting position. Then unapply those transforms.

Animating transforms is super efficient, thus FLIP is super efficient. Before WAAPI, we had to directly manipulate element’s styles to set transforms and wait for the next frame to unset/invert it:

// FLIP Before the WAAPI
el.style.transform = `translateY(200px)`;

requestAnimationFrame(() => {
  el.style.transform = '';
});

A lot of libraries are built upon this approach. However, there are several problems with this:

Everything feels like a huge hack.

It is extremely difficult to reverse the FLIP animation. While CSS transforms are reversed “for free” once a class is removed, this is not the case here. Starting a new FLIP while a previous one is running can cause glitches. Reversing requires parsing a transform matrix with getComputedStyle and using it to calculate the current dimensions before setting a new animation.

Advanced animations are close to impossible. For example, to prevent distorting a scaled parent’s children, we need to have access to current scale value each frame. This can only be done by parsing the transform matrix.

There’s lots of browser gotchas. For example, sometimes getting a FLIP animation to work flawlessly in Firefox requires calling requestAnimationFrame twice:

requestAnimationFrame(() => {
  requestAnimationFrame(() => {
    el.style.transform = '';
  });
});

We get none of these problems when WAAPI is used. Reversing can be painlessly done with the reverse function. The counter-scaling of children is also possible. And when there is a bug, it is easy to pinpoint the exact culprit since we’re only working with simple functions, like animate and reverse, rather than combing through things like the requestAnimationFrame approach.

Here’s the outline of the WAAPI version:

el.classList.toggle('someclass');
const keyframes = /* Calculate the size/position diff */;
el.animate(keyframes, 2000);
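One way the “calculate the size/position diff” step could be filled in is sketched below. The function name buildFlipKeyframes and the sample rectangles are my own assumptions; in a browser, the two rectangles would typically come from getBoundingClientRect calls made before and after the class toggle.

```javascript
// Hypothetical helper: given the element's rectangle before (first) and after (last)
// a layout change, return keyframes that start at the old place and end at the new one.
function buildFlipKeyframes(first, last) {
  const dx = first.left - last.left;
  const dy = first.top - last.top;
  const sx = first.width / last.width;
  const sy = first.height / last.height;
  return [
    // Inverted state: the element already sits at its final position in the DOM,
    // so this transform makes it appear where it used to be.
    { transformOrigin: 'top left', transform: `translate(${dx}px, ${dy}px) scale(${sx}, ${sy})` },
    // Final state: no transform.
    { transformOrigin: 'top left', transform: 'none' },
  ];
}

// Plain objects standing in for DOMRect values.
const first = { left: 0, top: 200, width: 100, height: 100 };
const last = { left: 0, top: 0, width: 200, height: 100 };

const keyframes = buildFlipKeyframes(first, last);
console.log(keyframes[0].transform); // translate(0px, 200px) scale(0.5, 1)
```

In a browser, the result could be passed straight to el.animate(keyframes, 2000).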

FLIP and React

To understand how FLIP animations work in React, it is important to know how and, most importantly, why they work in plain JavaScript. Recall the anatomy of a FLIP animation:

Everything that has a purple background needs to happen before the “paint” step of rendering. Otherwise, we would see a flash of new styles for a moment which is not good. Things get a little bit more complicated in React since all DOM updates are done for us.

The magic of FLIP animations is that an element is transformed before the browser has a chance to paint. So how do we know the “before paint” moment in React?

Meet the useLayoutEffect hook. If you ever wondered what it is for… this is it! Anything we pass in this callback happens synchronously after DOM updates but before paint. In other words, this is a great place to set up a FLIP!

Let us do something the FLIP technique is very good for: animating the DOM position. There’s nothing CSS can do if we want to animate how an element moves from one DOM position to another. (Imagine completing a task in a to-do list and moving it to the list of “completed” tasks like when you click on items in the Pen below.)


Let’s look at the simplest example. Clicking on any of the two squares in the following Pen makes them swap positions. Without FLIP, it would happen instantly.


There’s a lot going on there. Notice how all work happens inside lifecycle hook callbacks: useEffect and useLayoutEffect. What makes it a little bit confusing is that the timeline of our FLIP animation is not obvious from code alone since it happens across two React renders. Here’s the anatomy of a React FLIP animation to show the different order of operations:

Although useEffect always runs after useLayoutEffect and after browser paint, it is important that we cache the element’s position and size after the first render. We won’t get a chance to do it on second render because useLayoutEffect is run after all DOM updates. But the procedure is essentially the same as with vanilla FLIP animations.

Caveats

Like most things, there are some caveats to consider when working with FLIP in React.

Keep it under 100ms

A FLIP animation is a calculation. Calculation takes time, and before you can show that smooth 60fps transform you need to do quite some work. People won’t notice a delay if it is under 100ms, so make sure everything is below that. The Performance tab in DevTools is a good place to check that.
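If you prefer a quick check in code over opening DevTools, a rough sketch like this one can time the setup work. The measure helper, its label, and the stand-in workload are assumptions for illustration; performance.now() is the standard high-resolution timer.

```javascript
// Hypothetical helper: run a synchronous setup function and report how long it took.
function measure(label, fn, budgetMs = 100) {
  const start = performance.now();
  const result = fn();
  const elapsedMs = performance.now() - start;
  return { label, result, elapsedMs, withinBudget: elapsedMs < budgetMs };
}

// Stand-in for the real FLIP preparation work (reading rects, computing deltas).
const report = measure('flip-setup', () => {
  let total = 0;
  for (let i = 0; i < 1000; i += 1) total += i;
  return total;
});

console.log(`${report.label}: ${report.elapsedMs.toFixed(2)}ms, within budget: ${report.withinBudget}`);
```

This only covers the JavaScript side of the work; style and layout time still shows up best in the Performance tab.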

Unnecessary renders

We can’t use useState for caching size, positions and animation objects because every setState will cause an unnecessary render and slow down the app. It can even cause bugs in the worst of cases. Try using useRef instead and think of it as an object that can be mutated without rendering anything.

Layout thrashing

Avoid repeatedly triggering browser layout. In the context of FLIP animations, that means avoid looping through elements and reading their position with getBoundingClientRect, then immediately animating them with animate. Batch “reads” and “writes” whenever possible. This will allow for extremely smooth animations.
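Here is a minimal sketch of batching, assuming we already cached each element’s previous rectangle. The helper animateFromPreviousPositions and the fake elements are made up for illustration; getBoundingClientRect and animate are the real DOM methods they stand in for.

```javascript
// Hypothetical helper: read every element's position first, then start every animation.
// Interleaving reads and writes in one loop can force the browser to recalculate layout repeatedly.
function animateFromPreviousPositions(elements, previousRects, duration = 300) {
  // Phase 1: reads only.
  const currentRects = elements.map((el) => el.getBoundingClientRect());

  // Phase 2: writes only.
  return elements.map((el, i) => {
    const dx = previousRects[i].left - currentRects[i].left;
    const dy = previousRects[i].top - currentRects[i].top;
    return el.animate(
      [{ transform: `translate(${dx}px, ${dy}px)` }, { transform: 'none' }],
      duration
    );
  });
}

// Minimal stand-ins for DOM elements so the sketch runs outside a browser.
const callLog = [];
const fakeElement = (name, rect) => ({
  getBoundingClientRect() {
    callLog.push(`read ${name}`);
    return rect;
  },
  animate(keyframes) {
    callLog.push(`write ${name}`);
    return { keyframes };
  },
});

const elements = [fakeElement('a', { left: 0, top: 0 }), fakeElement('b', { left: 0, top: 50 })];
const previousRects = [{ left: 0, top: 50 }, { left: 0, top: 0 }];

const animations = animateFromPreviousPositions(elements, previousRects);
console.log(callLog.join(', ')); // read a, read b, write a, write b
```

The log shows the point: all reads finish before the first write starts.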

Animation canceling

Try randomly clicking on the squares in the earlier demo while they move, then again after they stop. You will see glitches. In real life, users will interact with elements while they move, so it’s worth making sure they are canceled, paused, and updated smoothly.

However, not all animations can be reversed with reverse. Sometimes, we want them to stop and then move to a new position (like when randomly shuffling a list of elements). In this case, we need to:

obtain a size/position of a moving element

finish the current animation

calculate the new size and position differences

start a new animation

In React, this can be harder than it seems. I wasted a lot of time struggling with it. The current animation object must be cached. A good way to do it is to create a Map so that we can get the animation by an ID. Then, we need to obtain the size and position of the moving element. There are two ways to do it:

Use a function component: Simply loop through every animated element right in the body of the function and cache the current positions.

Use a class component: Use the getSnapshotBeforeUpdate lifecycle method.

In fact, official React docs recommend using getSnapshotBeforeUpdate “because there may be delays between the “render” phase lifecycles (like render) and “commit” phase lifecycles (like getSnapshotBeforeUpdate and componentDidUpdate).” However, there is no hook counterpart of this method yet. I found that using the body of the function component is fine enough.
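Outside of React, the four cancel-and-restart steps listed earlier can be sketched roughly as follows. The names restartFlip, runningAnimations, and createFakeElement are hypothetical, and the fake element only imitates getBoundingClientRect, animate, and finish well enough to run the sketch without a browser.

```javascript
// Cache of running animations, keyed by a caller-chosen element ID (hypothetical scheme).
const runningAnimations = new Map();

function restartFlip(id, el, applyLayoutChange, duration = 300) {
  // 1. Obtain the position of the (possibly still moving) element.
  const currentRect = el.getBoundingClientRect();

  // 2. Finish the current animation, if there is one.
  const previous = runningAnimations.get(id);
  if (previous) previous.finish();

  // 3. Apply the DOM change and calculate the new position difference.
  applyLayoutChange();
  const finalRect = el.getBoundingClientRect();
  const dx = currentRect.left - finalRect.left;
  const dy = currentRect.top - finalRect.top;

  // 4. Start a new animation and cache it.
  const animation = el.animate(
    [{ transform: `translate(${dx}px, ${dy}px)` }, { transform: 'none' }],
    duration
  );
  runningAnimations.set(id, animation);
  return animation;
}

// Stand-in for a DOM element: a layout position plus a visual offset while animating.
function createFakeElement(rect) {
  const el = {
    layout: rect,
    offset: { x: 0, y: 0 },
    getBoundingClientRect() {
      return { left: el.layout.left + el.offset.x, top: el.layout.top + el.offset.y };
    },
    animate(keyframes) {
      return {
        keyframes,
        finished: false,
        finish() {
          this.finished = true;
          el.offset = { x: 0, y: 0 };
        },
      };
    },
  };
  return el;
}

const box = createFakeElement({ left: 0, top: 0 });
const firstAnimation = restartFlip('box', box, () => { box.layout = { left: 100, top: 0 }; });

// Pretend the first animation is mid-flight: the box is visually at left = 60.
box.offset = { x: -40, y: 0 };
const secondAnimation = restartFlip('box', box, () => { box.layout = { left: 0, top: 80 }; });

console.log(firstAnimation.finished); // true
console.log(secondAnimation.keyframes[0].transform); // translate(60px, -80px)
```

In React, step 1 happens before the DOM update (in the function body or getSnapshotBeforeUpdate) and the remaining steps happen after it, but the order of operations is the same.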

Don’t fight the browser

I’ve said it before, but avoid fighting the browser and try to make things happen the way the browser would do it. If we need to animate a simple size change, then consider whether CSS would suffice (e.g. transform: scale()). I’ve found that FLIP animations are used best where browsers really can’t help:

Animating DOM position change (as we did above)

Shared layout animations

The second is a more complicated version of the first. There are two DOM elements that act and look as one changing its position (while another is unmounted/hidden). This trick enables some cool animations. For example, this animation is made with a library I built called react-easy-flip that uses this approach:


Libraries

There are quite a few libraries that make FLIP animations in React easier and abstract the boilerplate. Ones that are currently maintained actively include: react-flip-toolkit and mine, react-easy-flip.

If you do not mind something heavier but capable of more general animations, check out framer-motion. It also does cool shared layout animations! There is a video digging into that library.

Resources and references

Animating the Unanimatable by Josh W. Comeau

Build performant expand & collapse animations by Paul Lewis and Stephen McGruer

The Magic Inside Magic Motion by Matt Perry

Using animate CSS variables from JavaScript, tweeted by @keyframers

Inside look at modern web browser (part 3) by Mariko Kosaka

Building a Complex UI Animation in React, Simply by Alex Holachek

Animating Layouts with the FLIP Technique by David Khourshid

Smooth animations with React Hooks, again by Kirill Vasiltsov

Shared element transition with React Hooks by Jayant Bhawal

The post Everything You Need to Know About FLIP Animations in React appeared first on CSS-Tricks.

Everything You Need to Know About FLIP Animations in React published first on https://deskbysnafu.tumblr.com/

0 notes

Text

Everything You Need to Know About FLIP Animations in React

With a very recent Safari update, Web Animations API (WAAPI) is now supported without a flag in all modern browsers (except IE). Here’s a handy Pen where you can check which features your browser supports. The WAAPI is a nice way to do animation (that needs to be done in JavaScript) because it’s native — meaning it requires no additional libraries to work. If you’re completely new to WAAPI, here’s a very good introduction by Dan Wilson.

One of the most efficient approaches to animation is FLIP. FLIP requires a bit of JavaScript to do its thing.

Let’s take a look at the intersection of using the WAAPI, FLIP, and integrating all that into React. But we’ll start without React first, then get to that.

FLIP and WAAPI

FLIP animations are made much easier by the WAAPI!

Quick refresher on FLIP: The big idea is that you position the element where you want it to end up first. Next, apply transforms to move it to the starting position. Then unapply those transforms.

Animating transforms is super efficient, thus FLIP is super efficient. Before WAAPI, we had to directly manipulate element’s styles to set transforms and wait for the next frame to unset/invert it:

// FLIP Before the WAAPI el.style.transform = `translateY(200px)`;

requestAnimationFrame(() => { el.style.transform = ''; });

A lot of libraries are built upon this approach. However, there are several problems with this:

Everything feels like a huge hack.

It is extremely difficult to reverse the FLIP animation. While CSS transforms are reversed “for free” once a class is removed, this is not the case here. Starting a new FLIP while a previous one is running can cause glitches. Reversing requires parsing a transform matrix with getComputedStyles and using it to calculate the current dimensions before setting a new animation.

Advanced animations are close to impossible. For example, to prevent distorting a scaled parent’s children, we need to have access to current scale value each frame. This can only be done by parsing the transform matrix.

There’s lots of browser gotchas. For example, sometimes getting a FLIP animation to work flawlessly in Firefox requires calling requestAnimationFrame twice:

requestAnimationFrame(() => { requestAnimationFrame(() => { el.style.transform = ''; }); });

We get none of these problems when WAAPI is used. Reversing can be painlessly done with the reverse function.The counter-scaling of children is also possible. And when there is a bug, it is easy to pinpoint the exact culprit since we’re only working with simple functions, like animate and reverse, rather than combing through things like the requestAnimationFrame approach.

Here’s the outline of the WAAPI version:

el.classList.toggle('someclass'); const keyframes = /* Calculate the size/position diff */; el.animate(keyframes, 2000);

FLIP and React

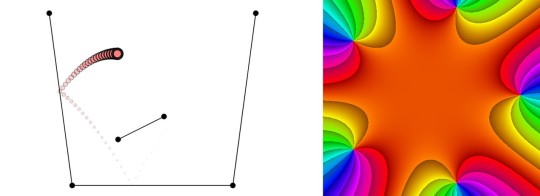

To understand how FLIP animations work in React, it is important to know how and, most importantly, why they work in plain JavaScript. Recall the anatomy of a FLIP animation:

Everything that has a purple background needs to happen before the “paint” step of rendering. Otherwise, we would see a flash of new styles for a moment which is not good. Things get a little bit more complicated in React since all DOM updates are done for us.

The magic of FLIP animations is that an element is transformed before the browser has a chance to paint. So how do we know the “before paint” moment in React?

Meet the useLayoutEffect hook. If you even wondered what is for… this is it! Anything we pass in this callback happens synchronously after DOM updates but before paint. In other words, this is a great place to set up a FLIP!

Let us do something the FLIP technique is very good for: animating the DOM position. There’s nothing CSS can do if we want to animate how an element moves from one DOM position to another. (Imagine completing a task in a to-do list and moving it to the list of “completed” tasks like when you click on items in the Pen below.)

CodePen Embed Fallback

Let’s look at the simplest example. Clicking on any of the two squares in the following Pen makes them swap positions. Without FLIP, it would happen instantly.

CodePen Embed Fallback

There’s a lot going on there. Notice how all work happens inside lifecycle hook callbacks: useEffect and useLayoutEffect. What makes it a little bit confusing is that the timeline of our FLIP animation is not obvious from code alone since it happens across two React renders. Here’s the anatomy of a React FLIP animation to show the different order of operations:

Although useEffect always runs after useLayoutEffect and after browser paint, it is important that we cache the element’s position and size after the first render. We won’t get a chance to do it on second render because useLayoutEffect is run after all DOM updates. But the procedure is essentially the same as with vanilla FLIP animations.

Caveats

Like most things, there are some caveats to consider when working with FLIP in React.

Keep it under 100ms

A FLIP animation is calculation. Calculation takes time and before you can show that smooth 60fps transform you need to do quite some work. People won’t notice a delay if it is under 100ms, so make sure everything is below that. The Performance tab in DevTools is a good place to check that.

Unnecessary renders

We can’t use useState for caching size, positions and animation objects because every setState will cause an unnecessary render and slow down the app. It can even cause bugs in the worst of cases. Try using useRef instead and think of it as an object that can be mutated without rendering anything.

Layout thrashing

Avoid repeatedly triggering browser layout. In the context of FLIP animations, that means avoid looping through elements and reading their position with getBoundingClientRect, then immediately animating them with animate. Batch “reads” and “writes” whenever possible. This will allow for extremely smooth animations.

Animation canceling

Try randomly clicking on the squares in the earlier demo while they move, then again after they stop. You will see glitches. In real life, users will interact with elements while they move, so it’s worth making sure they are canceled, paused, and updated smoothly.

However, not all animations can be reversed with reverse. Sometimes, we want them to stop and then move to a new position (like when randomly shuffling a list of elements). In this case, we need to:

obtain a size/position of a moving element

finish the current animation

calculate the new size and position differences

start a new animation

In React, this can be harder than it seems. I wasted a lot of time struggling with it. The current animation object must be cached. A good way to do it is to create a Map so to get the animation by an ID. Then, we need to obtain the size and position of the moving element. There are two ways to do it:

Use a function component: Simply loop through every animated element right in the body of the function and cache the current positions.

Use a class component: Use the getSnapshotBeforeUpdate lifecycle method.

In fact, official React docs recommend using getSnapshotBeforeUpdate “because there may be delays between the “render” phase lifecycles (like render) and “commit” phase lifecycles (like getSnapshotBeforeUpdate and componentDidUpdate).” However, there is no hook counterpart of this method yet. I found that using the body of the function component is fine enough.

Don’t fight the browser

I’ve said it before, but avoid fighting the browser and try to make things happen the way the browser would do it. If we need to animate a simple size change, then consider whether CSS would suffice (e.g. transform: scale()) . I’ve found that FLIP animations are used best where browsers really can’t help:

Animating DOM position change (as we did above)

Sharing layout animations

The second is a more complicated version of the first. There are two DOM elements that act and look as one changing its position (while another is unmounted/hidden). This tricks enables some cool animations. For example, this animation is made with a library I built called react-easy-flip that uses this approach:

CodePen Embed Fallback

Libraries

There are quite a few libraries that make FLIP animations in React easier and abstract the boilerplate. Ones that are currently maintained actively include: react-flip-toolkit and mine, react-easy-flip.

If you do not mind something heavier but capable of more general animations, check out framer-motion. It also does cool shared layout animations! There is a video digging into that library.

Resources and references

Animating the Unanimatable by Josh W. Comeau

Build performant expand & collapse animations by Paul Lewis and Stephen McGruer

The Magic Inside Magic Motion by Matt Perry

Using animate CSS variables from JavaScript, tweeted by @keyframers

Inside look at modern web browser (part 3) by Mariko Kosaka

Building a Complex UI Animation in React, Simply by Alex Holachek

Animating Layouts with the FLIP Technique by David Khourshid

Smooth animations with React Hooks, again by Kirill Vasiltsov

Shared element transition with React Hooks by Jayant Bhawal

The post Everything You Need to Know About FLIP Animations in React appeared first on CSS-Tricks.

source https://css-tricks.com/everything-you-need-to-know-about-flip-animations-in-react/


Text

Introducing Expo AR: Three.js on ARKit

The newest release of Expo features an experimental release of the Expo Augmented Reality API for iOS. This enables the creation of AR scenes using just JavaScript with familiar libraries such as three.js, along with React Native for user interfaces and Expo’s native APIs for geolocation and other device features. Check out the below video to see it in action!

youtube

Play the demo on Expo here! You’ll need an ARKit-compatible device to run it. The demo uses three.js for graphics, cannon.js for real-time physics, and Expo’s Audio API to play the music. You can summon the ducks toward you by touching the screen. The sound changes when you go underwater. You can find the source code for the demo on GitHub.

We’ll walk through the creation of a basic AR app from scratch in this blog post. You can find the resulting app on Snack here, where you can edit the code in the browser and see changes immediately using the Expo app on your phone! We’ll keep this app really simple so you can see how easy it is to get AR working, but all of the awesome features of three.js will be available to you to expand your app later. For further control you can use Expo’s OpenGL API directly to perform your own custom rendering.

Making a basic three.js app

First let’s make a three.js app that doesn’t use AR. Create a new Expo project with the “Blank” template (check out the Up and Running guide in the Expo documentation if you haven’t done this before; it suggests the “Tab Navigation” template but we’ll go with the “Blank” one). Make sure you can open it with Expo on your phone. It should look like this:

Update to the latest version of the expo library:

npm update expo

Make sure its version is at least 21.0.2.

Now let’s add the expo-three and three.js libraries to our project. Run the following command to install them from npm:

npm i -S three expo-three

Import them at the top of App.js as follows:

import * as THREE from 'three';
import ExpoTHREE from 'expo-three';

Now let’s add a full-screen Expo.GLView in which we will render with three.js. First, import Expo:

import Expo from 'expo';

Then replace render() in the App component with:

render() {
  return (
    <Expo.GLView style={{ flex: 1 }} />
  );
}

This should make the app turn into just a white screen. That’s what an Expo.GLView shows by default. Let’s get some three.js in there! First, let’s add an onContextCreate callback for the Expo.GLView. This is where we receive a gl object to do graphics stuff with:

render() {
  return (
    <Expo.GLView
      style={{ flex: 1 }}
      onContextCreate={this._onGLContextCreate}
    />
  );
}

_onGLContextCreate = async (gl) => {
  // Do graphics stuff here!
}

We’ll mirror the introductory three.js tutorial, except using modern JavaScript and using expo-three’s utility function to create an Expo-compatible three.js renderer. That tutorial explains the meaning of each three.js concept we’ll use pretty well. So you can use the code from here and read the text there! All of the following code will go into the _onGLContextCreate function.

First we’ll add a scene, a camera and a renderer:

const scene = new THREE.Scene();
const camera = new THREE.PerspectiveCamera(
  75, gl.drawingBufferWidth / gl.drawingBufferHeight, 0.1, 1000);

const renderer = ExpoTHREE.createRenderer({ gl });
renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

This code reflects that in the three.js tutorial, except we don’t use window (a web concept) to get the size of the renderer, and we also don’t need to attach the renderer to any document (again a web concept) — the Expo.GLView is already attached!

The next step is the same as from the three.js tutorial:

const geometry = new THREE.BoxGeometry(1, 1, 1);
const material = new THREE.MeshBasicMaterial({ color: 0x00ff00 });
const cube = new THREE.Mesh(geometry, material);
scene.add(cube);

camera.position.z = 5;

Now let’s write the main loop. This is again the same as from the three.js tutorial, except Expo.GLView’s gl object requires you to signal the end of a frame explicitly:

const animate = () => {
  requestAnimationFrame(animate);
  renderer.render(scene, camera);
  gl.endFrameEXP();
};
animate();

Now if you refresh your app you should see a green square, which is just what a green cube would look like from one side:

Oh and also you may have noticed the warnings. We don’t need those extensions for this app (and for most apps), but they still pop up, so let’s disable the yellow warning boxes for now. Put this after your import statements at the top of the file:

console.disableYellowBox = true;

Let’s get that cube dancing! In the animate function we wrote earlier, before the renderer.render(…) line, add the following:

cube.rotation.x += 0.07; cube.rotation.y += 0.04;

This just updates the rotation of the cube every frame, resulting in a rotating cube:
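For reference, here is one way the loop and the per-frame rotation could be folded together. This is only a sketch: startLoop and its update parameter are names made up for illustration, and everything the loop touches is passed in so it can also be exercised outside Expo with stand-in objects.

```javascript
// Sketch of the render loop with the per-frame update folded in.
// `startLoop` and `update` are hypothetical names, not part of Expo or three.js.
// `raf` defaults to the global requestAnimationFrame that React Native provides.
function startLoop({ gl, renderer, scene, camera, update }, raf = (cb) => requestAnimationFrame(cb)) {
  const animate = () => {
    raf(animate);                   // schedule the next frame first
    update();                       // e.g. rotate the cube
    renderer.render(scene, camera); // draw the scene
    gl.endFrameEXP();               // tell Expo.GLView the frame is complete
  };
  animate();
}
```

Inside _onGLContextCreate this would be called as startLoop({ gl, renderer, scene, camera, update: () => { cube.rotation.x += 0.07; cube.rotation.y += 0.04; } }).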

You have a basic three.js app ready now… So let’s put it in AR!

Adding AR

First, we create an AR session for the lifetime of the app. Expo needs to access your device’s camera and other hardware facilities to provide AR tracking information and the live video feed, and it tracks the state of this access using the AR session. An AR session is created with the Expo.GLView.startARSessionAsync() function. Let’s do this in _onGLContextCreate before all our three.js code. Since we need to call this on our Expo.GLView, we save a ref to it and make the call:

render() {
  return (
    <Expo.GLView
      ref={(ref) => this._glView = ref}
      style={{ flex: 1 }}
      onContextCreate={this._onGLContextCreate}
    />
  );
}

_onGLContextCreate = async (gl) => {
  const arSession = await this._glView.startARSessionAsync();

  ...

Now we are ready to show the live background behind the 3D scene! ExpoTHREE.createARBackgroundTexture(arSession, renderer) is an expo-three utility that returns a THREE.Texture that live-updates to display the current AR background frame. We can just set the scene’s .background to it and it’ll display behind our scene. Since this needs the renderer to be passed in, we add it after the renderer creation line:

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

This should give you the live camera feed behind the cube:

You may notice though that this doesn’t quite get us to AR yet: we have the live background behind the cube but the cube still isn’t being positioned to reflect the device’s position in real life. We have one little step left: using an expo-three AR camera instead of three.js’s default PerspectiveCamera. We’ll use the ExpoTHREE.createARCamera(arSession, width, height, near, far) to create the camera instead of what we already have. Replace the current camera initialization with:

const camera = ExpoTHREE.createARCamera(
  arSession,
  gl.drawingBufferWidth,
  gl.drawingBufferHeight,
  0.01,
  1000
);

Currently we position the cube at (0, 0, 0) and move the camera back to see it. Instead, we’ll keep the camera position unaffected (since it is now updated in real-time to reflect AR tracking) and move the cube forward a bit. Remove the camera.position.z = 5; line and add cube.position.z = -0.4; after the cube creation (but before the main loop). Also, let’s scale the cube down to a side of 0.07 to reflect the AR coordinate space’s units. In the end, your code from scene creation to cube creation should now look as follows:

const scene = new THREE.Scene();
const camera = ExpoTHREE.createARCamera(
  arSession,
  gl.drawingBufferWidth,
  gl.drawingBufferHeight,
  0.01,
  1000
);
const renderer = ExpoTHREE.createRenderer({ gl });
renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

const geometry = new THREE.BoxGeometry(0.07, 0.07, 0.07);
const material = new THREE.MeshBasicMaterial({ color: 0x00ff00 });
const cube = new THREE.Mesh(geometry, material);
cube.position.z = -0.4;
scene.add(cube);

This should give you a cube suspended in AR:

And there you go: you now have a basic Expo AR app ready!

Links

To recap, here are some links to useful resources from this tutorial:

The basic AR app on Snack, where you can edit it in the browser

The source code for the “Dire Dire Ducks!” demo on Github

The Expo page for the “Dire Dire Ducks!” demo, which lets you play it on Expo immediately

Have fun and hope you make cool things! 🙂

Had to include this picture to get a cool thumbnail for the blog post…

Introducing Expo AR: Three.js on ARKit was originally published in Exposition on Medium, where people are continuing the conversation by highlighting and responding to this story.

via React Native on Medium http://ift.tt/2xmNejL

Via https://reactsharing.com/introducing-expo-ar-three-js-on-arkit-4.html


Text

Everything You Need to Know About FLIP Animations in React

With a very recent Safari update, Web Animations API (WAAPI) is now supported without a flag in all modern browsers (except IE). Here’s a handy Pen where you can check which features your browser supports. The WAAPI is a nice way to do animation (that needs to be done in JavaScript) because it’s native — meaning it requires no additional libraries to work. If you’re completely new to WAAPI, here’s a very good introduction by Dan Wilson.

One of the most efficient approaches to animation is FLIP. FLIP requires a bit of JavaScript to do its thing.

Let’s take a look at the intersection of using the WAAPI, FLIP, and integrating all that into React. But we’ll start without React first, then get to that.

FLIP and WAAPI

FLIP animations are made much easier by the WAAPI!

Quick refresher on FLIP: The big idea is that you position the element where you want it to end up first. Next, apply transforms to move it to the starting position. Then unapply those transforms.
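To make the “invert” step a bit more concrete, here is a minimal sketch that turns two measured rectangles into the transform that visually puts the element back at its starting position. The name invertTransform and the plain-object rects are assumptions for illustration, and the result is only exact when the element uses transform-origin: top left.

```javascript
// Hypothetical helper: computes the "invert" transform of a FLIP animation.
// `first` and `last` are plain rects shaped like getBoundingClientRect() results.
// Assumes the element has `transform-origin: top left`.
function invertTransform(first, last) {
  // How far the element moved between the two layouts.
  const dx = first.left - last.left;
  const dy = first.top - last.top;
  // How much it grew or shrank (guard against zero-sized rects).
  const sx = last.width === 0 ? 1 : first.width / last.width;
  const sy = last.height === 0 ? 1 : first.height / last.height;
  return `translate(${dx}px, ${dy}px) scale(${sx}, ${sy})`;
}

// Example: the element ended up 200px lower and kept its size.
const before = { left: 0, top: 0, width: 100, height: 100 };
const after = { left: 0, top: 200, width: 100, height: 100 };
console.log(invertTransform(before, after)); // translate(0px, -200px) scale(1, 1)
```

Animating from that transform back to no transform is the “play” step.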

Animating transforms is super efficient, thus FLIP is super efficient. Before WAAPI, we had to directly manipulate an element’s styles to set transforms and wait for the next frame to unset/invert it:

// FLIP Before the WAAPI
el.style.transform = `translateY(200px)`;

requestAnimationFrame(() => {
  el.style.transform = '';
});

A lot of libraries are built upon this approach. However, there are several problems with this:

Everything feels like a huge hack.

It is extremely difficult to reverse the FLIP animation. While CSS transforms are reversed “for free” once a class is removed, this is not the case here. Starting a new FLIP while a previous one is running can cause glitches. Reversing requires parsing a transform matrix with getComputedStyle and using it to calculate the current dimensions before setting a new animation.

Advanced animations are close to impossible. For example, to prevent distorting a scaled parent’s children, we need to have access to the current scale value each frame. This can only be done by parsing the transform matrix.

There are lots of browser gotchas. For example, sometimes getting a FLIP animation to work flawlessly in Firefox requires calling requestAnimationFrame twice:

requestAnimationFrame(() => {
  requestAnimationFrame(() => {
    el.style.transform = '';
  });
});
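To give a feel for the matrix parsing mentioned in the list above: getComputedStyle reports a 2D transform as a string of the form matrix(a, b, c, d, tx, ty). The sketch below pulls the scale and translation out of such a string. parseMatrix is a hypothetical helper written for this illustration, and it deliberately gives up on matrix3d(...) values.

```javascript
// Hypothetical helper: extracts translate and scale from a computed 2D transform.
// Returns identity values for "none" and null for anything it cannot parse
// (including matrix3d, which is out of scope for this sketch).
function parseMatrix(transform) {
  if (!transform || transform === 'none') {
    return { scaleX: 1, scaleY: 1, translateX: 0, translateY: 0 };
  }
  const match = /^matrix\(([^)]+)\)$/.exec(transform.trim());
  if (!match) return null;
  const [a, b, c, d, tx, ty] = match[1].split(',').map(Number);
  return {
    // Length of each basis vector, so a rotation does not distort the scale.
    scaleX: Math.hypot(a, b),
    scaleY: Math.hypot(c, d),
    translateX: tx,
    translateY: ty,
  };
}

console.log(parseMatrix('matrix(0.5, 0, 0, 2, 10, -20)'));
```

In a browser the input would come from getComputedStyle(el).transform, and this would have to run on every frame of the animation.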

We get none of these problems when WAAPI is used. Reversing can be painlessly done with the reverse function. The counter-scaling of children is also possible. And when there is a bug, it is easy to pinpoint the exact culprit since we’re only working with simple functions, like animate and reverse, rather than combing through things like the requestAnimationFrame approach.

Here’s the outline of the WAAPI version:

el.classList.toggle('someclass');
const keyframes = /* Calculate the size/position diff */;
el.animate(keyframes, 2000);
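Filling in that outline, a position-only version could look something like the sketch below. The flip function and its applyChange callback are hypothetical names. The only browser APIs used are getBoundingClientRect and Element.animate, and size changes are left out to keep it short.

```javascript
// A sketch of FLIP with the Web Animations API (position only).
// `el` is a DOM element, or any object with getBoundingClientRect() and animate().
// `applyChange` is a hypothetical callback that puts the element in its final layout.
function flip(el, applyChange, duration = 300) {
  const first = el.getBoundingClientRect(); // First: where the element is now
  applyChange();                            // Last: apply the final layout
  const last = el.getBoundingClientRect();

  // Invert: the offset that makes the element look like it never moved.
  const dx = first.left - last.left;
  const dy = first.top - last.top;

  // Play: animate from the inverted offset back to no offset.
  return el.animate(
    [
      { transform: `translate(${dx}px, ${dy}px)` },
      { transform: 'translate(0px, 0px)' },
    ],
    { duration, easing: 'ease-out' }
  );
}
```

In a page this might be called as flip(el, () => el.classList.toggle('someclass'), 2000). The returned Animation object is what later gives us reverse and cancel.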

FLIP and React

To understand how FLIP animations work in React, it is important to know how and, most importantly, why they work in plain JavaScript. Recall the anatomy of a FLIP animation:

Everything that has a purple background needs to happen before the “paint” step of rendering. Otherwise, we would see a flash of new styles for a moment which is not good. Things get a little bit more complicated in React since all DOM updates are done for us.

The magic of FLIP animations is that an element is transformed before the browser has a chance to paint. So how do we know the “before paint” moment in React?

Meet the useLayoutEffect hook. If you ever wondered what it is for… this is it! Anything we pass in this callback happens synchronously after DOM updates but before paint. In other words, this is a great place to set up a FLIP!

Let us do something the FLIP technique is very good for: animating the DOM position. There’s nothing CSS can do if we want to animate how an element moves from one DOM position to another. (Imagine completing a task in a to-do list and moving it to the list of “completed” tasks like when you click on items in the Pen below.)


Let’s look at the simplest example. Clicking on any of the two squares in the following Pen makes them swap positions. Without FLIP, it would happen instantly.


There’s a lot going on there. Notice how all work happens inside lifecycle hook callbacks: useEffect and useLayoutEffect. What makes it a little bit confusing is that the timeline of our FLIP animation is not obvious from code alone since it happens across two React renders. Here’s the anatomy of a React FLIP animation to show the different order of operations:

Although useEffect always runs after useLayoutEffect and after browser paint, it is important that we cache the element’s position and size after the first render. We won’t get a chance to do it on second render because useLayoutEffect is run after all DOM updates. But the procedure is essentially the same as with vanilla FLIP animations.

Caveats

Like most things, there are some caveats to consider when working with FLIP in React.

Keep it under 100ms

A FLIP animation involves calculation. Calculation takes time, and before you can show that smooth 60fps transform you need to do quite a bit of work. People won’t notice a delay if it is under 100ms, so make sure everything is below that. The Performance tab in DevTools is a good place to check that.
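Besides DevTools, a quick check can live in the code during development. The sketch below times the synchronous setup work and warns when it goes over budget. withBudget is a hypothetical helper, and the 100 ms default simply mirrors the rule of thumb above.

```javascript
// Sketch: time the synchronous setup work of a FLIP and warn above a budget.
// `withBudget` is an illustrative name; `work` is whatever measuring and
// keyframe calculation happens before the animation starts.
function withBudget(label, work, budgetMs = 100) {
  const start = performance.now();
  const result = work();
  const elapsed = performance.now() - start;
  if (elapsed > budgetMs) {
    console.warn(`${label} took ${elapsed.toFixed(1)}ms (budget ${budgetMs}ms)`);
  }
  return { result, elapsed };
}
```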

Unnecessary renders

We can’t use useState for caching size, positions and animation objects because every setState will cause an unnecessary render and slow down the app. It can even cause bugs in the worst of cases. Try using useRef instead and think of it as an object that can be mutated without rendering anything.

Layout thrashing

Avoid repeatedly triggering browser layout. In the context of FLIP animations, that means avoid looping through elements and reading their position with getBoundingClientRect, then immediately animating them with animate. Batch “reads” and “writes” whenever possible. This will allow for extremely smooth animations.
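Here is a rough sketch of what batching could look like: measure every element first, and only then start the animations. animateAll and firstRects are made-up names for illustration. firstRects stands for whatever cache of earlier positions you keep, keyed by element.

```javascript
// Sketch: do all layout reads first, then all writes.
// `elements` are DOM elements (or stand-ins with the same two methods);
// `firstRects` is a Map of positions cached before the DOM changed.
function animateAll(elements, firstRects, duration = 300) {
  // Read phase: only layout reads happen here.
  const moves = elements.map((el) => {
    const first = firstRects.get(el);
    const last = el.getBoundingClientRect();
    return { el, dx: first.left - last.left, dy: first.top - last.top };
  });

  // Write phase: only animations are started here.
  return moves
    .filter(({ dx, dy }) => dx !== 0 || dy !== 0) // skip elements that did not move
    .map(({ el, dx, dy }) =>
      el.animate(
        [
          { transform: `translate(${dx}px, ${dy}px)` },
          { transform: 'translate(0px, 0px)' },
        ],
        { duration }
      )
    );
}
```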

Animation canceling

Try randomly clicking on the squares in the earlier demo while they move, then again after they stop. You will see glitches. In real life, users will interact with elements while they move, so it’s worth making sure animations are canceled, paused, and updated smoothly.

However, not all animations can be reversed with reverse. Sometimes, we want them to stop and then move to a new position (like when randomly shuffling a list of elements). In this case, we need to:

obtain a size/position of a moving element

finish the current animation

calculate the new size and position differences

start a new animation

In React, this can be harder than it seems. I wasted a lot of time struggling with it. The current animation object must be cached. A good way to do it is to create a Map so that the animation can be looked up by ID. Then, we need to obtain the size and position of the moving element. There are two ways to do it:

Use a function component: Simply loop through every animated element right in the body of the function and cache the current positions.

Use a class component: Use the getSnapshotBeforeUpdate lifecycle method.

In fact, the official React docs recommend using getSnapshotBeforeUpdate “because there may be delays between the ‘render’ phase lifecycles (like render) and ‘commit’ phase lifecycles (like getSnapshotBeforeUpdate and componentDidUpdate).” However, there is no hook counterpart of this method yet. I found that using the body of the function component works well enough.
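Outside of React, the four steps listed earlier (measure, stop, recalculate, restart) can be sketched as below. The retarget function, the animations Map and the applyChange callback are all hypothetical names. The sketch ends the old animation with cancel(), which is one possible reading of “finish the current animation”, and it only handles position.

```javascript
// Sketch of restarting a FLIP animation mid-flight.
// `animations` caches the running Animation object per ID.
const animations = new Map();

function retarget(id, el, applyChange, duration = 300) {
  // 1. Read the on-screen position while the old animation is still applied.
  const current = el.getBoundingClientRect();

  // 2. Stop the old animation so it no longer affects measurements.
  const running = animations.get(id);
  if (running) running.cancel();

  // 3. Move to the new layout and measure the difference.
  applyChange();
  const last = el.getBoundingClientRect();
  const dx = current.left - last.left;
  const dy = current.top - last.top;

  // 4. Start a new animation from where the element visibly was.
  const next = el.animate(
    [
      { transform: `translate(${dx}px, ${dy}px)` },
      { transform: 'translate(0px, 0px)' },
    ],
    { duration }
  );
  animations.set(id, next);
  return next;
}
```

In a React component the Map would typically live in a ref, so that it survives renders without causing any.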

Don’t fight the browser

I’ve said it before, but avoid fighting the browser and try to make things happen the way the browser would do it. If we need to animate a simple size change, then consider whether CSS would suffice (e.g. transform: scale()). I’ve found that FLIP animations are best used where browsers really can’t help:

Animating DOM position change (as we did above)

Sharing layout animations

The second is a more complicated version of the first. There are two DOM elements that act and look as one element changing its position (while the other is unmounted/hidden). This trick enables some cool animations. For example, this animation is made with a library I built called react-easy-flip that uses this approach:

Libraries

There are quite a few libraries that make FLIP animations in React easier and abstract the boilerplate. Ones that are currently maintained actively include: react-flip-toolkit and mine, react-easy-flip.

If you do not mind something heavier but capable of more general animations, check out framer-motion. It also does cool shared layout animations! There is a video digging into that library.

Resources and references

Animating the Unanimatable by Josh W. Comeau

Build performant expand & collapse animations by Paul Lewis and Stephen McGruer

The Magic Inside Magic Motion by Matt Perry

Using animate CSS variables from JavaScript, tweeted by @keyframers

Inside look at modern web browser (part 3) by Mariko Kosaka

Building a Complex UI Animation in React, Simply by Alex Holachek

Animating Layouts with the FLIP Technique by David Khourshid

Smooth animations with React Hooks, again by Kirill Vasiltsov

Shared element transition with React Hooks by Jayant Bhawal

The post Everything You Need to Know About FLIP Animations in React appeared first on CSS-Tricks.

Everything You Need to Know About FLIP Animations in React published first on https://deskbysnafu.tumblr.com/


Photo

Great weekend watching with the Vue.js documentary

#477 — February 28, 2020


JavaScript Weekly

▶ Vue.js: The Documentary — A well produced 30 minute documentary (from the creators of the previously popular Ember.js documentary) focused on Evan You, the development of Vue.js, its position in our ecosystem, and the userbase.

Honeypot

Rome: A New Experimental JavaScript Toolchain from Facebook — Includes a compiler, linter, formatter, bundler, and testing framework (these are all new and not existing tools) and aims to be a comprehensive tool for anything related to the processing of JavaScript code. It comes from Sebastian McKenzie, one of the creators of both Babel and Yarn.

Facebook Experimental

Master State Management with Redux & Typescript at ForwardJS — Join us for a full day in-depth React workshop at ForwardJS Ottawa. Further talks touch on TypeScript, containers, design systems, static sites, scaling teams and monorepos.

ForwardJS sponsor

How Autotracking Works — This is really interesting! It’s a truly deep dive into Ember’s new reactivity system but is applicable to your thinking as a JavaScript developer generally. Autotracking, at its core, is about tracking the values that are used during a computation so that computation can be memoized. Lots to learn here.

Chris Garrett

V8 v8.1's Intl.DisplayNames — Another six weeks have passed so there’s another version of the V8 JavaScript engine that underpins Chrome and Node. In 8.1 we gain Intl.DisplayNames, an API for displaying translated names of languages, regions, written scripts and currencies. More detail here.

Dominik Inführ
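A quick taste of the API, as a small sketch that assumes a runtime shipping Intl.DisplayNames with English locale data:

```javascript
// Intl.DisplayNames turns codes into human-readable, localized names.
const regions = new Intl.DisplayNames(['en'], { type: 'region' });
const languages = new Intl.DisplayNames(['en'], { type: 'language' });
const currencies = new Intl.DisplayNames(['en'], { type: 'currency' });

console.log(regions.of('US'));     // "United States"
console.log(languages.of('fr'));   // "French"
console.log(currencies.of('JPY')); // "Japanese Yen"
```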

React v16.13.0 Released — Mostly a release for bugfixes and new deprecation warnings to help prepare for a future major release.

⚡️ Quick Releases

Snowpack 1.5.0 — Compile-time dependency arranger gets even faster.

Vue.js 3.0.0 alpha 7 — The big 3.0 is still on the way :-)

Ava 3.4 — Popular Node testing system.

Normalizr 3.6 — Schema-driven nested JSON normalizer.

Mocha 7.1 — Popular test framework gets native ES module support on Node.

💻 Jobs

Find a Dev Job Through Vettery — Vettery is completely free for job seekers. Make a profile, name your salary, and connect with hiring managers from top employers.

Vettery

Lead Server-Side Developer (Sydney or Remote across AUS/NZ) — Build back-end frameworks and server-side software (Express.js + MongoDB + GraphQL) and write server-side code (JavaScript, Node.js).

Compono

ℹ️ If you're interested in running a job listing in JavaScript Weekly, there's more info here.

📘 Articles & Tutorials

Metrics, Logs, and Traces in JavaScript Tools — A look at the different types of useful metrics available to JavaScript developers.

Shawn Wang

You've Got to Make Your Test Fail — Tests are great, but they need to (initially) fail! “If you’re not careful you can write a test that’s worse than having no tests at all.”

Kent C Dodds

Interactive Lab: Build a JS+Python Serverless Application — Join IBM's Upkar Lidder and learn how to build a cloud-native Visual Recognition service.

IBM Developer sponsor

How to Use requestAnimationFrame() with Vanilla JS — If you’ve never really had a use for this feature, but want to know how it works, this is easy to follow with some simple demos.

Chris Ferdinandi

Getting Started with Ember Octane: Building a Blog — We haven’t linked an Ember framework tutorial for a while and this is a neat one.

Frank Treacy

Automated Headless Browser Scripts in Node with Puppeteer — A walk-through on how to use Puppeteer to write scripts to interact with web pages programmatically. The example project is based on a native lands location API.

Sam Agnew

The Mindset of Component Composition in Vue — A step-by-step tutorial building a search bar component. Good for those already familiar with Vue but maybe want to see another developer’s perspective on component composition.

Marina Mosti

Getting Started with Vuex: A Beginners Guide — This claims to be a “brief” tutorial, but there’s lots of meat here for those looking to understand Vuex, the state management solution for Vue.js apps.

codesource.io

Web Rebels Conference - CFP Ends 1st of March — Web Rebels is back on the 14-15th of May 2020 in Oslo, Norway. Submit to our CFP or get a ticket and join us for two days of JS.

Web Rebels Conference sponsor

What is a Type in TypeScript? Two Perspectives — Describes two perspectives (types as sets of values and type compatibility relationships) to help understand types in TypeScript.

Axel Rauschmayer

▶ Building a Reusable Pagination Component in Vue.js

Jeffrey Biles

How to Quickly Scaffold and Architect A New Angular App

Tomas Trajan

🔧 Code & Tools

Scala.js 1.0: A Scala to JavaScript Transpiler — An alternative way to build robust front-end web applications in Scala.

Scala.js

exifr: A Fast, Versatile JS EXIF Reading Library — Exif (EXchangeable Image File Format) is a common metadata format embedded into image and other media files. More here, including examples.

Mike Kovařík

React Query 1.0: Hooks for Fetching, Caching and Updating Data — Hooks that help you keep your server cache state separate from your global state and let you read and update everything asynchronously. There’s a lot to enjoy here.

Tanner Linsley

Electron React Boilerplate 1.0: A Foundation for Scalable Cross-Platform Apps — Brings together Electron (the popular cross-platform desktop app development toolkit) with React, Redux, React Router, webpack and React Hot Loader. v1.0 completes its migration to TypeScript.

Electron React Boilerplate

Optimize End User Experience in Real Time with Real User Monitoring

Site24x7 sponsor

date-fns 2.10: It's Like lodash But For Dates — A popular date utility library that provides an extensive and consistent API for manipulating dates. v2.10.0 has just dropped.

date-fns

Panolens.js: A JavaScript Panorama Viewer Based on Three.js — View examples here. This is a lightweight, flexible, WebGL-based panorama viewer built on top of Three.js.

Ray Chen

Git for Node and the Browser using libgit Compiled to WASM — Naturally, this is a rather experimental idea(!) There is a browser based demo if you’re interested though.

Peter Salomonsen

via JavaScript Weekly https://ift.tt/3addnFv

0 notes

Text

Introducing Expo AR: Three.js on ARKit

The newest release of Expo features an experimental release of the Expo Augmented Reality API for iOS. This enables the creation of AR scenes using just JavaScript with familiar libraries such as three.js, along with React Native for user interfaces and Expo’s native APIs for geolocation and other device features. Check out the below video to see it in action!

youtube

Play the demo on Expo here! You’ll need an ARKit-compatible device to run it. The demo uses three.js for graphics, cannon.js for real-time physics, and Expo’s Audio API to play the music. You can summon the ducks toward you by touching the screen. The sound changes when you go underwater. You can find the source code for the demo on GitHub.

We’ll walk through the creation of a basic AR app from scratch in this blog post. You can find the resulting app on Snack here, where you can edit the code in the browser and see changes immediately using the Expo app on your phone! We’ll keep this app really simple so you can see how easy it is to get AR working, but all of the awesome features of three.js will be available to you to expand your app later. For further control you can use Expo’s OpenGL API directly to perform your own custom rendering.

Making a basic three.js app

First let’s make a three.js app that doesn’t use AR. Create a new Expo project with the “Blank” template (check out the Up and Running guide in the Expo documentation if you haven’t done this before — it suggests the “Tab Navigation” template but we’ll go with the “Blank” one). Make sure you can open it with Expo on your phone, it should look like this:

Update to the latest version of the expo library:

npm update expo

Make sure its version is at least 21.0.2.

Now let’s add the expo-three and three.js libraries to our projects. Run the following command to install them from npm:

npm i -S three expo-three

Import them at the top of App.js as follows:

import * as THREE from 'three'; import ExpoTHREE from 'expo-three';

Now let’s add a full-screenExpo.GLView in which we will render with three.js. First, import Expo:

import Expo from 'expo';

Then replace render() in the App component with:

render() return ( <Expo.GLView style= /> );

This should make the app turn into just a white screen. That’s what an Expo.GLView shows by default. Let’s get some three.js in there! First, let’s add anonContextCreate callback for the Expo.GLView, this is where we receive a gl object to do graphics stuff with:

render() return ( <Expo.GLView style= onContextCreate=this._onGLContextCreate /> );

_onGLContextCreate = async (gl) => // Do graphics stuff here!

We’ll mirror the introductory three.js tutorial, except using modern JavaScript and using expo-three’s utility function to create an Expo-compatible three.js renderer. That tutorial explains the meaning of each three.js concept we’ll use pretty well. So you can use the code from here and read the text there! All of the following code will go into the _onGLContextCreate function.

First we’ll add a scene, a camera and a renderer:

const scene = new THREE.Scene(); const camera = new THREE.PerspectiveCamera( 75, gl.drawingBufferWidth / gl.drawingBufferHeight, 0.1, 1000);

const renderer = ExpoTHREE.createRenderer( gl ); renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

This code reflects that in the three.js tutorial, except we don’t use window (a web concept) to get the size of the renderer, and we also don’t need to attach the renderer to any document (again a web concept) — the Expo.GLView is already attached!

The next step is the same as from the three.js tutorial:

const geometry = new THREE.BoxGeometry(1, 1, 1); const material = new THREE.MeshBasicMaterial( color: 0x00ff00 ); const cube = new THREE.Mesh(geometry, material); scene.add(cube);

camera.position.z = 5;

Now let’s write the main loop. This is again the same as from the three.js tutorial, except Expo.GLView’s gl object requires you to signal the end of a frame explicitly:

const animate = () => requestAnimationFrame(animate); renderer.render(scene, camera); gl.endFrameEXP(); animate();

Now if you refresh your app you should see a green square, which is just what a green cube would look like from one side:

Oh and also you may have noticed the warnings. We don’t need those extensions for this app (and for most apps), but they still pop up, so let’s disable the yellow warning boxes for now. Put this after your import statements at the top of the file:

console.disableYellowBox = true;

Let’s get that cube dancing! In the animate function we wrote earlier, before the renderer.render(…) line, add the following:

cube.rotation.x += 0.07; cube.rotation.y += 0.04;

This just updates the rotation of the cube every frame, resulting in a rotating cube:

You have a basic three.js app ready now… So let’s put it in AR!

Adding AR

First, we create an AR session for the lifetime of the app. Expo needs to access your devices camera and other hardware facilities to provide AR tracking information and the live video feed, and it tracks the state of this access using the AR session. An AR session is created with the Expo.GLView.startARSession() function. Let’s do this in _onGLContextCreate before all our three.js code. Since we need to call this on our Expo.GLView, we save a ref to it and make the call:

render() return ( <Expo.GLView ref=(ref) => this._glView = ref style= onContextCreate=this._onGLContextCreate /> );

_onGLContextCreate = async (gl) => { const arSession = await this._glView.startARSessionAsync();

...

Now we are ready to show the live background behind the 3d scene! ExpoTHREE.createARBackgroundTexture(arSession, renderer) is an expo-three utility that returns a THREE.Texture that live-updates to display the current AR background frame. We can just set the scene’s .background to it and it’ll display behind our scene. Since this needs the renderer to be passed in, we added it after the renderer creation line:

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

This should give you the live camera feed behind the cube:

You may notice though that this doesn’t quite get us to AR yet: we have the live background behind the cube but the cube still isn’t being positioned to reflect the device’s position in real life. We have one little step left: using an expo-three AR camera instead of three.js’s default PerspectiveCamera. We’ll use the ExpoTHREE.createARCamera(arSession, width, height, near, far) to create the camera instead of what we already have. Replace the current camera initialization with:

const camera = ExpoTHREE.createARCamera( arSession, gl.drawingBufferWidth, gl.drawingBufferHeight, 0.01, 1000 );

Currently we position the cube at (0, 0, 0) and move the camera back to see it. Instead, we’ll keep the camera position unaffected (since it is now updated in real-time to reflect AR tracking) and move the cube forward a bit. Remove the camera.position.z = 5; line and add cube.position.z = -0.4; after the cube creation (but before the main loop). Also, let’s scale the cube down to a side of 0.07 to reflect the AR coordinate space’s units. In the end, your code from scene creation to cube creation should now look as follows:

const scene = new THREE.Scene();
const camera = ExpoTHREE.createARCamera(
  arSession,
  gl.drawingBufferWidth,
  gl.drawingBufferHeight,
  0.01,
  1000
);
const renderer = ExpoTHREE.createRenderer({ gl });
renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

const geometry = new THREE.BoxGeometry(0.07, 0.07, 0.07);
const material = new THREE.MeshBasicMaterial({ color: 0x00ff00 });
const cube = new THREE.Mesh(geometry, material);
cube.position.z = -0.4;
scene.add(cube);

This should give you a cube suspended in AR:

And there you go: you now have a basic Expo AR app ready!

Links

To recap, here are some links to useful resources from this tutorial:

The basic AR app on Snack, where you can edit it in the browser

The source code for the “Dire Dire Ducks!” demo on GitHub

The Expo page for the “Dire Dire Ducks!” demo, which lets you play it on Expo immediately

Have fun and hope you make cool things! 🙂

Had to include this picture to get a cool thumbnail for the blog post…

Introducing Expo AR: Three.js on ARKit was originally published in Exposition on Medium, where people are continuing the conversation by highlighting and responding to this story.

via React Native on Medium http://ift.tt/2xmNejL

Via https://reactsharing.com/introducing-expo-ar-three-js-on-arkit-3.html

Text

Introducing Expo AR: Three.js on ARKit

The newest release of Expo features an experimental release of the Expo Augmented Reality API for iOS. This enables the creation of AR scenes using just JavaScript with familiar libraries such as three.js, along with React Native for user interfaces and Expo’s native APIs for geolocation and other device features. Check out the video below to see it in action!

youtube

Play the demo on Expo here! You’ll need an ARKit-compatible device to run it. The demo uses three.js for graphics, cannon.js for real-time physics, and Expo’s Audio API to play the music. You can summon the ducks toward you by touching the screen. The sound changes when you go underwater. You can find the source code for the demo on GitHub.

We’ll walk through the creation of a basic AR app from scratch in this blog post. You can find the resulting app on Snack here, where you can edit the code in the browser and see changes immediately using the Expo app on your phone! We’ll keep this app really simple so you can see how easy it is to get AR working, but all of the awesome features of three.js will be available to you to expand your app later. For further control you can use Expo’s OpenGL API directly to perform your own custom rendering.

Making a basic three.js app

First, let’s make a three.js app that doesn’t use AR. Create a new Expo project with the “Blank” template (check out the Up and Running guide in the Expo documentation if you haven’t done this before; it suggests the “Tab Navigation” template, but we’ll go with the “Blank” one). Make sure you can open it with Expo on your phone. It should look like this:

Update to the latest version of the expo library:

npm update expo

Make sure its version is at least 21.0.2.
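If you would rather check the minimum version in a script than by eye, the comparison can be sketched as below. This is only an illustration: isAtLeast is a hypothetical helper (not part of Expo or npm), and it assumes plain numeric versions like 21.0.2 with no pre-release suffix.

```javascript
// Compare two dotted version strings numerically, segment by segment.
// Hypothetical helper for illustration; assumes purely numeric segments.
function isAtLeast(version, minimum) {
  const a = version.split('.').map(Number);
  const b = minimum.split('.').map(Number);
  const length = Math.max(a.length, b.length);
  for (let i = 0; i < length; i++) {
    const x = a[i] || 0; // a missing segment counts as 0
    const y = b[i] || 0;
    if (x !== y) return x > y;
  }
  return true; // every segment is equal
}

console.log(isAtLeast('21.0.2', '21.0.2')); // true
console.log(isAtLeast('20.0.0', '21.0.2')); // false
```

Comparing numerically matters because a plain string comparison would rank 21.0.10 below 21.0.2. The installed version string itself can be read from the version field of node_modules/expo/package.json.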

Now let’s add the expo-three and three.js libraries to our project. Run the following command to install them from npm:

npm i -S three expo-three

Import them at the top of App.js as follows:

import * as THREE from 'three';
import ExpoTHREE from 'expo-three';

Now let’s add a full-screen Expo.GLView in which we will render with three.js. First, import Expo:

import Expo from 'expo';

Then replace render() in the App component with:

render() {
  return (
    <Expo.GLView style={{ flex: 1 }} />
  );
}

This should make the app turn into just a white screen. That’s what an Expo.GLView shows by default. Let’s get some three.js in there! First, let’s add an onContextCreate callback for the Expo.GLView. This is where we receive a gl object to do graphics stuff with:

render() {
  return (
    <Expo.GLView
      style={{ flex: 1 }}
      onContextCreate={this._onGLContextCreate}
    />
  );
}

_onGLContextCreate = async (gl) => {
  // Do graphics stuff here!
};

We’ll mirror the introductory three.js tutorial, except using modern JavaScript and using expo-three’s utility function to create an Expo-compatible three.js renderer. That tutorial explains the meaning of each three.js concept we’ll use pretty well. So you can use the code from here and read the text there! All of the following code will go into the _onGLContextCreate function.

First we’ll add a scene, a camera and a renderer:

const scene = new THREE.Scene();
const camera = new THREE.PerspectiveCamera(
  75, gl.drawingBufferWidth / gl.drawingBufferHeight, 0.1, 1000);

const renderer = ExpoTHREE.createRenderer({ gl });
renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

This code mirrors the code in the three.js tutorial, except we don’t use window (a web concept) to get the size of the renderer, and we also don’t need to attach the renderer to any document (again a web concept): the Expo.GLView is already attached!
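To make the difference from the web version concrete, here is a small sketch that derives the camera arguments from the gl object’s drawing buffer rather than from window. perspectiveArgs is a hypothetical helper, not an expo-three function, and the stand-in gl object and its dimensions are made up so the snippet runs on its own.

```javascript
// Derive PerspectiveCamera arguments from a GL context's drawing buffer,
// where the web tutorial would use window.innerWidth / window.innerHeight.
// Hypothetical helper for illustration; not part of expo-three.
function perspectiveArgs(gl, fov = 75, near = 0.1, far = 1000) {
  const aspect = gl.drawingBufferWidth / gl.drawingBufferHeight;
  return [fov, aspect, near, far];
}

// Stand-in for the gl object; these dimensions are invented for the example.
const fakeGl = { drawingBufferWidth: 750, drawingBufferHeight: 1334 };
const args = perspectiveArgs(fakeGl);
console.log(args);
```

In the app itself, the same values could be spread into the constructor, as in new THREE.PerspectiveCamera(...perspectiveArgs(gl)).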

The next step is the same as from the three.js tutorial:

const geometry = new THREE.BoxGeometry(1, 1, 1);
const material = new THREE.MeshBasicMaterial({ color: 0x00ff00 });
const cube = new THREE.Mesh(geometry, material);
scene.add(cube);

camera.position.z = 5;

Now let’s write the main loop. This is again the same as from the three.js tutorial, except Expo.GLView’s gl object requires you to signal the end of a frame explicitly:

const animate = () => {
  requestAnimationFrame(animate);
  renderer.render(scene, camera);
  gl.endFrameEXP();
};
animate();
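The order of calls inside the loop is the important part: draw first, then signal the end of the frame. The sketch below replays two frames with stand-in objects so it can run without Expo or three.js. createFrameLoop and the stubs are hypothetical; only renderer.render and gl.endFrameEXP come from the tutorial code.

```javascript
// Build a frame callback that renders and then signals end-of-frame.
// Every collaborator is injected so the sketch runs without Expo or three.js.
function createFrameLoop({ renderer, scene, camera, gl, schedule }) {
  const animate = () => {
    schedule(animate);              // queue the next frame
    renderer.render(scene, camera); // draw into the GL context
    gl.endFrameEXP();               // tell the GLView this frame is complete
  };
  return animate;
}

// Stubs that record the order in which they are called.
const calls = [];
const pending = [];
const animate = createFrameLoop({
  renderer: { render: () => calls.push('render') },
  scene: {},
  camera: {},
  gl: { endFrameEXP: () => calls.push('endFrameEXP') },
  schedule: (fn) => pending.push(fn), // stands in for requestAnimationFrame
});

animate();         // frame 1
pending.shift()(); // frame 2, run the way a scheduler would run it
console.log(calls.join(' ')); // render endFrameEXP render endFrameEXP
```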

Now if you refresh your app you should see a green square, which is just what a green cube would look like from one side:

Oh and also you may have noticed the warnings. We don’t need those extensions for this app (and for most apps), but they still pop up, so let’s disable the yellow warning boxes for now. Put this after your import statements at the top of the file:

console.disableYellowBox = true;

Let’s get that cube dancing! In the animate function we wrote earlier, before the renderer.render(…) line, add the following:

cube.rotation.x += 0.07; cube.rotation.y += 0.04;

This just updates the rotation of the cube every frame, resulting in a rotating cube:
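Because the increments above are applied once per frame, the cube spins faster or slower as the frame rate changes. One common alternative, sketched here as an option rather than as what the tutorial does, is to scale the rotation by elapsed time. The speeds below are assumptions chosen to match 0.07 and 0.04 radians per frame at 60 frames per second.

```javascript
// Angular speeds in radians per second (assumed: the tutorial's per-frame
// increments multiplied by 60 frames per second).
const SPEED_X = 0.07 * 60;
const SPEED_Y = 0.04 * 60;

// Advance a rotation by speed * elapsed time, independent of frame rate.
function advanceRotation(rotation, dtSeconds) {
  return {
    x: rotation.x + SPEED_X * dtSeconds,
    y: rotation.y + SPEED_Y * dtSeconds,
  };
}

// Simulate one second of animation at a given frame rate.
function simulate(fps) {
  let rotation = { x: 0, y: 0 };
  for (let i = 0; i < fps; i++) {
    rotation = advanceRotation(rotation, 1 / fps);
  }
  return rotation;
}

// Both frame rates end at (nearly) the same angles after one second.
console.log(simulate(60), simulate(30));
```

In the app, dtSeconds could be computed from the difference between Date.now() readings on consecutive frames, and the result assigned back to cube.rotation.x and cube.rotation.y.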

You have a basic three.js app ready now… So let’s put it in AR!

Adding AR

First, we create an AR session for the lifetime of the app. Expo needs to access your device’s camera and other hardware facilities to provide AR tracking information and the live video feed, and it tracks the state of this access using the AR session. An AR session is created with the Expo.GLView.startARSessionAsync() function. Let’s do this in _onGLContextCreate before all our three.js code. Since we need to call this on our Expo.GLView, we save a ref to it and make the call:

render() {
  return (
    <Expo.GLView
      ref={(ref) => this._glView = ref}
      style={{ flex: 1 }}
      onContextCreate={this._onGLContextCreate}
    />
  );
}

_onGLContextCreate = async (gl) => {
  const arSession = await this._glView.startARSessionAsync();

...

Now we are ready to show the live background behind the 3D scene! ExpoTHREE.createARBackgroundTexture(arSession, renderer) is an expo-three utility that returns a THREE.Texture that live-updates to display the current AR background frame. We can just set the scene’s .background to it and it’ll display behind our scene. Since this needs the renderer to be passed in, we add it after the renderer creation line:

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

This should give you the live camera feed behind the cube:

You may notice though that this doesn’t quite get us to AR yet: we have the live background behind the cube but the cube still isn’t being positioned to reflect the device’s position in real life. We have one little step left: using an expo-three AR camera instead of three.js’s default PerspectiveCamera. We’ll use the ExpoTHREE.createARCamera(arSession, width, height, near, far) to create the camera instead of what we already have. Replace the current camera initialization with:

const camera = ExpoTHREE.createARCamera( arSession, gl.drawingBufferWidth, gl.drawingBufferHeight, 0.01, 1000 );

Currently we position the cube at (0, 0, 0) and move the camera back to see it. Instead, we’ll keep the camera position unaffected (since it is now updated in real-time to reflect AR tracking) and move the cube forward a bit. Remove the camera.position.z = 5; line and add cube.position.z = -0.4; after the cube creation (but before the main loop). Also, let’s scale the cube down to a side of 0.07 to reflect the AR coordinate space’s units. In the end, your code from scene creation to cube creation should now look as follows:

const scene = new THREE.Scene();
const camera = ExpoTHREE.createARCamera(
  arSession,
  gl.drawingBufferWidth,
  gl.drawingBufferHeight,
  0.01,
  1000
);
const renderer = ExpoTHREE.createRenderer({ gl });
renderer.setSize(gl.drawingBufferWidth, gl.drawingBufferHeight);

scene.background = ExpoTHREE.createARBackgroundTexture(arSession, renderer);

const geometry = new THREE.BoxGeometry(0.07, 0.07, 0.07);
const material = new THREE.MeshBasicMaterial({ color: 0x00ff00 });
const cube = new THREE.Mesh(geometry, material);
cube.position.z = -0.4;
scene.add(cube);
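To see why these numbers were chosen, it helps to know that ARKit world coordinates are measured in meters, so the cube is now 7 cm wide and sits 40 cm in front of the starting camera position. The rough calculation below compares how large the cube appears before and after the change. angularSizeDegrees is a hypothetical helper, and distances are measured to the cube’s center.

```javascript
// Angle, in degrees, subtended by an object of a given size whose center
// is at a given distance: 2 * atan(size / (2 * distance)).
function angularSizeDegrees(size, distance) {
  return (2 * Math.atan(size / (2 * distance)) * 180) / Math.PI;
}

const before = angularSizeDegrees(1, 5);     // unit cube, camera 5 units back
const after = angularSizeDegrees(0.07, 0.4); // 7 cm cube, 40 cm ahead
console.log(before.toFixed(1), after.toFixed(1)); // 11.4 10.0
```

The two angles are close, so the cube takes up about as much of the view as it did in the non-AR version, while having a plausible real-world size.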

This should give you a cube suspended in AR:

And there you go: you now have a basic Expo AR app ready!

Links

To recap, here are some links to useful resources from this tutorial:

The basic AR app on Snack, where you can edit it in the browser

The source code for the “Dire Dire Ducks!” demo on GitHub

The Expo page for the “Dire Dire Ducks!” demo, which lets you play it on Expo immediately

Have fun and hope you make cool things! 🙂

Had to include this picture to get a cool thumbnail for the blog post…

Introducing Expo AR: Three.js on ARKit was originally published in Exposition on Medium, where people are continuing the conversation by highlighting and responding to this story.

via React Native on Medium http://ift.tt/2xmNejL

Via https://reactsharing.com/introducing-expo-ar-three-js-on-arkit-2.html

Text

Introducing Expo AR: Three.js on ARKit

The newest release of Expo features an experimental release of the Expo Augmented Reality API for iOS. This enables the creation of AR scenes using just JavaScript with familiar libraries such as three.js, along with React Native for user interfaces and Expo’s native APIs for geolocation and other device features. Check out the video below to see it in action!

youtube

Play the demo on Expo here! You’ll need an ARKit-compatible device to run it. The demo uses three.js for graphics, cannon.js for real-time physics, and Expo’s Audio API to play the music. You can summon the ducks toward you by touching the screen. The sound changes when you go underwater. You can find the source code for the demo on GitHub.

We’ll walk through the creation of a basic AR app from scratch in this blog post. You can find the resulting app on Snack here, where you can edit the code in the browser and see changes immediately using the Expo app on your phone! We’ll keep this app really simple so you can see how easy it is to get AR working, but all of the awesome features of three.js will be available to you to expand your app later. For further control you can use Expo’s OpenGL API directly to perform your own custom rendering.

Making a basic three.js app

First, let’s make a three.js app that doesn’t use AR. Create a new Expo project with the “Blank” template (check out the Up and Running guide in the Expo documentation if you haven’t done this before; it suggests the “Tab Navigation” template, but we’ll go with the “Blank” one). Make sure you can open it with Expo on your phone. It should look like this:

Update to the latest version of the expo library:

npm update expo

Make sure its version is at least 21.0.2.

Now let’s add the expo-three and three.js libraries to our project. Run the following command to install them from npm:

npm i -S three expo-three

Import them at the top of App.js as follows:

import * as THREE from 'three';
import ExpoTHREE from 'expo-three';

Now let’s add a full-screen Expo.GLView in which we will render with three.js. First, import Expo:

import Expo from 'expo';

Then replace render() in the App component with:

render() {
  return (
    <Expo.GLView style={{ flex: 1 }} />
  );
}

This should make the app turn into just a white screen. That’s what an Expo.GLView shows by default. Let’s get some three.js in there! First, let’s add an onContextCreate callback for the Expo.GLView. This is where we receive a gl object to do graphics stuff with:

render() {
  return (
    <Expo.GLView
      style={{ flex: 1 }}
      onContextCreate={this._onGLContextCreate}
    />
  );
}

_onGLContextCreate = async (gl) => {
  // Do graphics stuff here!
};